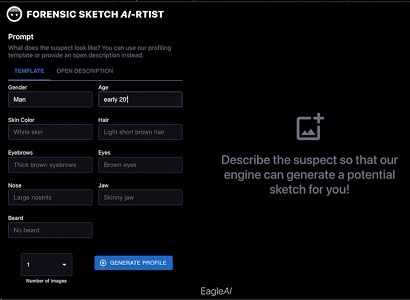

Screenshot of Forensic Sketch AI-rtist from the presentation uploaded to LabLab.

During a recent AI hackathon, two computer science experts from Portugal created a platform they hope will help speed up the forensic sketching process.

Forensic Sketch AI-rtist, created by EagleAI, leverages the DALL-E 2 deep learning model to generate hyper-realistic sketches from a text description in just seconds.

DALL-E and DALL-E 2 are deep learning models developed by OpenAI to generate digital images from natural language descriptions. DALL-E was first revealed by OpenAI in a blog post in January 2021. In April 2022, OpenAI announced DALL-E 2, a successor designed to generate more realistic images at higher resolutions.

Currently, a forensic sketch artist takes around 2 to 3 hours to draw a suspect from a witness description. The artist and the witness usually must be in the same room, which can slow the process down by days, depending on availability.

“We’re trying to tackle a major pain point of forensic sketch artists [by] using AI to speed up the process,” said developers Artur Fortunato and Filipe Reynaud in a presentation about their new platform.

The computer engineers, who both received an MS in computer science from the Instituto Superior Técnico in Portugal, developed the platform during LabLab’s recent OpenAI hackathon.

In the proposed solution, a human forensic sketch artist collects the suspect description from the witness. The artist enters that description into the client, which sends it to the server, where it is parsed and forwarded to DALL-E’s API. After a few seconds, the finished sketch travels back along the same route to the sketch artist.
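The client-to-server-to-API round trip described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not EagleAI's code: the names `SketchRequest`, `parse_description`, `server_handle` and `client_submit` are hypothetical, the network hop between client and server is reduced to a function call, and the call to DALL-E's API is replaced by an injected `generate_images` stub so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for the image-generation backend (DALL-E's API in the
# article). It takes a prompt and an image count and returns image references.
ImageGenerator = Callable[[str, int], List[str]]


@dataclass
class SketchRequest:
    description: str  # witness description as entered by the sketch artist
    num_images: int = 1


def parse_description(raw: str) -> str:
    """Hypothetical server-side parsing step: collapse whitespace and
    frame the description as a prompt for a pencil-style sketch."""
    cleaned = " ".join(raw.split())
    if not cleaned:
        raise ValueError("empty witness description")
    return f"Forensic pencil sketch of a suspect: {cleaned}"


def server_handle(req: SketchRequest, generate_images: ImageGenerator) -> List[str]:
    """Server: parse the description, forward it to the image API, return the results."""
    prompt = parse_description(req.description)
    return generate_images(prompt, req.num_images)


def client_submit(description: str, num_images: int,
                  generate_images: ImageGenerator) -> List[str]:
    """Client: package the artist's input and send it to the server.
    Here the network request is simulated by a direct function call."""
    req = SketchRequest(description=description, num_images=num_images)
    return server_handle(req, generate_images)


if __name__ == "__main__":
    # Offline stub in place of the real image-generation endpoint.
    def fake_generator(prompt: str, n: int) -> List[str]:
        return [f"sketch_{i}.png <- {prompt!r}" for i in range(n)]

    for image in client_submit("  male, about 40,   short grey hair ", 2, fake_generator):
        print(image)
```

Injecting the generator keeps the routing logic testable without an API key; in a real deployment the stub would be swapped for a call to OpenAI's image-generation endpoint and the client-server boundary would be an HTTP request.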

When entering the witness description into Forensic Sketch AI-rtist, the sketch artist can use either a template of key features or an open description box with no restrictions. The fill-in template asks for gender, age, skin color, hair color, type of eyebrows, eye color, nose description, jaw description, and type of beard. The platform can generate one to four sketches at a time.
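One way to picture the two input modes is as a prompt builder: template fields are joined into a single text prompt, while the open box is passed through unchanged. The sketch below is an assumption-laden illustration, not the platform's implementation. The field names mirror the template list above, but the prompt wording, the comma-separated format and the names `build_prompt` and `check_sketch_count` are hypothetical. It also enforces the one-to-four sketch limit.

```python
from typing import Dict, Optional

# Template fields listed in the article, in the order they are asked for.
TEMPLATE_FIELDS = [
    "gender", "age", "skin color", "hair color", "type of eyebrows",
    "eye color", "nose description", "jaw description", "type of beard",
]

MIN_SKETCHES, MAX_SKETCHES = 1, 4  # the platform generates one to four sketches


def build_prompt(template: Optional[Dict[str, str]] = None,
                 free_text: Optional[str] = None) -> str:
    """Build a prompt from the open description box or the fill-in template.
    If free text is given, it is used as-is and the template is ignored."""
    if free_text is not None:
        text = free_text.strip()
    elif template is not None:
        unknown = set(template) - set(TEMPLATE_FIELDS)
        if unknown:
            raise KeyError(f"unknown template fields: {sorted(unknown)}")
        # Keep only the fields the artist filled in, in template order.
        parts = [f"{field}: {template[field].strip()}"
                 for field in TEMPLATE_FIELDS
                 if template.get(field, "").strip()]
        text = ", ".join(parts)
    else:
        raise ValueError("provide either a template or a free-text description")
    if not text:
        raise ValueError("description is empty")
    return f"Pencil sketch of a suspect's face. {text}"


def check_sketch_count(n: int) -> int:
    """Reject requests outside the one-to-four sketch range."""
    if not MIN_SKETCHES <= n <= MAX_SKETCHES:
        raise ValueError(f"can generate {MIN_SKETCHES} to {MAX_SKETCHES} sketches, got {n}")
    return n


if __name__ == "__main__":
    print(build_prompt({"gender": "male", "age": "about 40", "type of beard": "stubble"}))
    print(check_sketch_count(4))
```

Emitting fields in a fixed template order, rather than in whatever order they were filled in, keeps prompts for similar descriptions consistent, which makes the generated sketches easier to compare.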

Once the image is generated, the forensic sketch artist can either end the process there or download the sketch and make small corrections before or while showing it to the witness.

Fortunato and Reynaud say their next step is to assess product-market fit to make sure the software is helpful and needed, before opening the platform up to beta users. In the meantime, they have their sights set on developing two additional features.

“We want to give artists the possibility of applying witness feedback directly into the software by using DALL-E’s API fine-tuning feature,” the computer science experts said. “Beside this, we also think it’s important to integrate Whisper so we can provide a speech-to-text functionality to the end-users.”

Criticism and bias

The possibility of AI-generated forensic sketches raises many questions, including the ever-present topic of bias.

Soon after OpenAI released DALL-E 2 in April 2022, users noted bias: for example, the caption “builder” showed only men, while the caption “flight attendant” showed only women. At the time, OpenAI attributed this to limitations in the available training data. By June, however, OpenAI said it was attempting to fix biases by reweighting certain data. In July, the company said it was implementing new mitigation techniques to help DALL-E generate more diverse images of the world’s population, and it claimed that internal users were 12 times more likely to say images included people of diverse backgrounds.

Artificially generated images in general, not just those produced by OpenAI, have drawn criticism before. In October 2022, the Edmonton Police Service in Canada released a DNA phenotyping image produced by Parabon in the hope of identifying a suspect in a brutal 2019 sexual assault. The computer-generated image of a Black man was quickly and widely criticized as racist. Forty-eight hours later, the image was removed from the media release and from social media sites, and the officer in charge of the sexual assault section issued an apology.

“The potential that a visual profile can provide far too broad a characterization from within a racialized community and in this case, Edmonton's Black community, was not something I adequately considered,” the officer said at the time.

Studies have repeatedly found that face recognition algorithms and deep learning models are least accurate for Black, Latino and other minority communities. Add to that the fact that Black people are 5 times more likely than white people to be stopped by police without cause, and the AI creation of hyper-realistic profiles of suspects who may be innocent becomes a grave concern.

Mistaken eyewitness identifications are also a leading factor in wrongful convictions. According to the Innocence Project, they contributed to 69% of wrongful convictions overturned by post-conviction DNA evidence in the U.S. In Brooklyn, for example, the district attorney’s office agreed to exonerate 25 wrongly convicted people over the five years from 2014 to 2019; 24 of them were Black and/or Latinx. According to the DA’s 2020 report, eyewitness misidentification was a contributing factor in 20% of those wrongful convictions.